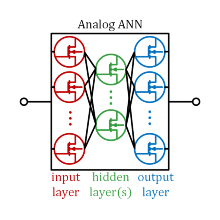

The remarkable performance of artificial intelligence on a wide range of problems is leading to its use in more and more applications and, particularly since the introduction of ChatGPT, has triggered a veritable boom. Such systems are based on artificial neural networks, whose inference essentially consists of computing two arithmetic operations, multiplication and addition, billions of times.
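To make this concrete, here is a minimal sketch in plain Python of one fully connected layer followed by a ReLU activation. Each output neuron accumulates a sum of weight-times-input products, so a layer with n inputs and m outputs performs n·m multiply-accumulate (MAC) operations. The function name `dense_layer` and all numbers are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple


def dense_layer(
    x: List[float], weights: List[List[float]], bias: List[float]
) -> Tuple[List[float], int]:
    """Fully connected layer with ReLU; returns the outputs and the number of MACs."""
    outputs: List[float] = []
    macs = 0
    for w_row, b in zip(weights, bias):
        acc = b  # the accumulator starts at the neuron's bias
        for xi, wi in zip(x, w_row):
            acc += wi * xi  # one multiplication plus one addition = one MAC
            macs += 1
        outputs.append(max(0.0, acc))  # ReLU activation
    return outputs, macs


x = [1.0, 0.5, -2.0]
weights = [[0.2, -0.4, 0.1],
           [0.5, 0.5, 0.5]]
bias = [0.0, 1.0]
y, macs = dense_layer(x, weights, bias)
print(y, macs)  # prints [0.0, 0.75] 6
```

The tiny 3-input, 2-output layer already needs 6 MACs. Scaled up to the layer sizes of modern networks, the same inner loop runs billions of times per inference, which is why the energy per multiplication and addition dominates.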

In conventional digital arithmetic units, even a simple multiplication of two numbers uses many hundreds of MOSFETs arranged in so-called array multipliers, and these transistors are charged and discharged for every calculation, which consumes a great deal of energy. This is where INT's new analog computing circuit concept, developed as part of the BMBF-funded AI-NET research project, comes in. The analog two-quadrant multiplier requires only two field-effect transistors. Current flows through them for only a very brief moment, and the multiplication result is represented as the electrical charge transferred during that time. The addition requires no additional component at all, because it exploits a physical principle: according to Kirchhoff's current law (node rule), the charges delivered by several multiplier cells to a common node simply add up.

This offers a very large potential for efficiency gains. Arithmetic units for AI applications on chips in data centers and smartphones, as well as transmitter and receiver circuits for optical data transmission, could thus become significantly smaller and more energy-efficient. Previous studies indicate that analog multiplication with the new INT technology is around 100 times more energy-efficient than an already heavily reduced and optimized digital FP4 multiplication on the latest generation of Nvidia AI accelerators (Blackwell GB200).
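The charge-domain principle described above can be illustrated with an idealized behavioral model. This is a sketch under stated assumptions, not the INT circuit: it assumes the signed weight sets the direction and magnitude of a cell current, the non-negative activation sets how long the cell conducts, and the charge Q = I·t is the product. Because only the weight may be negative, the cell covers two of the four sign quadrants. The constants `I_UNIT` and `T_UNIT` and all function names are hypothetical; transistor nonlinearity, mismatch, noise, and parasitics are ignored.

```python
from typing import Sequence

# Hypothetical scaling constants, chosen only for illustration.
I_UNIT = 1e-9  # cell current per unit weight, in amperes (1 nA)
T_UNIT = 1e-9  # conduction time per unit activation, in seconds (1 ns)


def cell_charge(weight: float, activation: float) -> float:
    """Idealized two-quadrant multiplier cell: returns the charge Q = I * t it delivers.

    The weight is signed (sign = current direction); the activation is a
    non-negative conduction time, so only two sign quadrants are supported.
    """
    if activation < 0:
        raise ValueError("two-quadrant cell: activation must be >= 0")
    current = weight * I_UNIT        # signed current through the cell
    duration = activation * T_UNIT   # brief time window in which current flows
    return current * duration        # charge in coulombs


def node_charge(weights: Sequence[float], activations: Sequence[float]) -> float:
    """Kirchhoff's current law: charges from all cells meeting at one node add up."""
    if len(weights) != len(activations):
        raise ValueError("weights and activations must have the same length")
    return sum(cell_charge(w, x) for w, x in zip(weights, activations))


def mac(weights: Sequence[float], activations: Sequence[float]) -> float:
    """Convert the accumulated node charge back into units of weight * activation."""
    return node_charge(weights, activations) / (I_UNIT * T_UNIT)


# 0.5*1.0 + (-1.0)*3.0 + 2.0*0.25 = -2.0 (up to floating-point rounding)
print(mac([0.5, -1.0, 2.0], [1.0, 3.0, 0.25]))
```

In this model no separate adder appears anywhere: the summation is just the total charge on the shared node, mirroring the text's point that Kirchhoff's law performs the addition for free.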

Within the project, two ASICs were developed in a 22 nm FDSOI CMOS technology. Measurements on these chips confirm both the basic functionality of the analog computing cells and their low energy consumption.

The figure shows the second ASIC, on which several neural network layers built from the analog computing cells are implemented.

Publications

2024

- J. Finkbeiner, R. Nägele, M. Grözing, M. Berroth, and G. Rademacher, “Characterization of a Femtojoule Voltage-to-Time Converter with Rectified Linear Unit Characteristic for Analog Neural Network Inference Accelerators,” in IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), 2024, pp. 253–257.

- J. Finkbeiner, R. Nägele, M. Grözing, M. Berroth, and G. Rademacher, “Ultra-energy-efficient analog multiply-accumulate and rectified linear unit circuit for artificial neural network inference accelerators,” in International Conference on Neuromorphic Computing and Engineering (ICNCE), 2024.

- R. Nägele, J. Finkbeiner, M. Grözing, M. Berroth, and G. Rademacher, “Characterization of an Analog MAC Cell with Multi-Bit Resolution for AI Inference Accelerators,” in IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), 2024, pp. 243–247.

2023

- R. Nägele, J. Finkbeiner, V. Stadtlander, M. Grözing, and M. Berroth, “Analog Multiply-Accumulate Cell with Multi-Bit Resolution for All-Analog AI Inference Accelerators,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 2023, pp. 1–13, 2023.

2022

- J. Finkbeiner, R. Nägele, M. Berroth, and M. Grözing, “Design of an Energy Efficient Voltage-to-Time Converter with Rectified Linear Unit Characteristics for Artificial Neural Networks,” in IEEE International New Circuits and Systems Conference (NEWCAS), 2022, pp. 327–331.

- R. Nägele, J. Finkbeiner, M. Berroth, and M. Grözing, “Design of an Energy Efficient Analog Two-Quadrant Multiplier Cell Operating in Weak Inversion,” in IEEE International New Circuits and Systems Conference (NEWCAS), 2022, pp. 5–9.

Additional Information

- Project page of the project sponsor [German]: Automated telecommunications infrastructure with intelligent autonomous systems

- Project page of the international consortium: Accelerating digital transformation in Europe by Intelligent NETwork automation

- BMBF press release [German]: Karliczek: Powerful network infrastructure is the central nervous system of the economy and society

- INT press release: INT researchers receive Future Prize for analog AI acceleration

- Press release of the Ewald Marquardt Foundation [German]: Private Ewald Marquardt Foundation awards Future Prize 2023

Contact

Jakob Finkbeiner

M. Sc., Research staff member

Raphael Nägele

M. Sc., Research staff member